- AI in Lab Coat

Why Emergency Rooms Are Missing Critical Suicide Clues in Children

plus: Nurses Say AI in Hospitals Feels More Like Surveillance Than Support

Happy Friday! It’s November 1st.

As we enter November, black bears burn an impressive 4,000 calories a day while hibernating—about as much as four full Thanksgiving meals. Living entirely off body fat, they lose up to 33% of their weight yet keep all their muscle and bone strength intact.

Nature’s way of sleeping strong!

Our picks for the week:

Featured Research: Why Emergency Rooms Are Missing Critical Suicide Clues in Children

Perspectives: Nurses Say AI in Hospitals Feels More Like Surveillance Than Support

Product Pipeline: TIME Names Sepsis ImmunoScore™ as Top Invention for AI-Enhanced Sepsis Prediction and Diagnosis

Policy & Ethics: One Year In: How the Biden-Harris Administration is Shaping Safe and Ethical AI Use

FEATURED RESEARCH

Why Emergency Rooms Are Missing Critical Suicide Clues in Children

A recent UCLA study shines a harsh light on the blind spots in our emergency rooms when it comes to detecting suicide risks in children and teens.

Using electronic health records from almost 3,000 young patients in Southern California, researchers found that standard detection methods frequently miss youth struggling with suicidal thoughts, especially among Black, Hispanic, male, and younger patients.

The gap in detection: Clinicians typically rely on a patient’s chief complaint or diagnostic codes. But these methods can’t catch the full picture.

For instance, only 59% of cases among children aged 6-9 were detected. Rates improved to 69% for ages 10-12 and 83% for older teens, which still leaves many at-risk youth undetected. Detection also lagged for Black and Hispanic youth compared to their White peers.

Often, underlying issues like depression or trauma are coded instead of suicidality, leaving a dangerous blind spot. Dr. Juliet Edgcomb, the study’s lead author, highlights the issue: “Suicide is transdiagnostic,” meaning it doesn’t always align neatly with any one diagnosis.

An AI-Driven Solution: By adding more data to their detection approach, like patient notes and lab results, the research team boosted accuracy significantly.

This advanced method closed detection gaps across age, race, and gender, bringing detection rates for Black and Hispanic youth closer to those of White youth.

With suicide rates rising, including an 8% annual increase in preteen suicide deaths from 2008 to 2022, this is one tech upgrade our healthcare system can’t afford to ignore.

For more details: Full Article

Brain Booster

Which of the following mixtures is scientifically proven to effectively neutralize the smell of skunk spray?

(See the answer and explanation below.)

Opinion and Perspectives

AI IN HEALTHCARE

Nurses Say AI in Hospitals Feels More Like Surveillance Than Support

Some nurses see AI in hospitals as more surveillance than support. Jamie Brown, President of the Michigan Nurses Association, raised concerns at a recent U.S. Department of Labor hearing, saying, “Many of these technologies are marketed as tools to improve patient care, but in fact, they track the activities of healthcare workers like me and frequently violate our privacy and the privacy of our patients.”

Surveillance vs. support: While AI is billed as a way to enhance patient care, nurses argue it often feels like a way to monitor staff.

Brown explained that hospitals sometimes use AI to make staffing and care decisions focused more on cost-cutting than actual needs.

“The data collected is then being used by algorithmic management systems to make unreasonable and inaccurate decisions about patient care and staffing,” she noted.

Hospitals push back: The Michigan Health & Hospital Association disagrees, stating AI helps reduce nurse burnout by streamlining tasks, not replacing patient care.

They emphasize that AI should support the “human touch,” with a focus on safety and quality.

Calls for transparency: Union nurses have pushed for transparency on AI, but Brown insists it’s not enough. Hospitals “don’t always follow the rules and tell us,” she said, calling for clear guidance to protect both worker and patient privacy.

For more details: Full Article

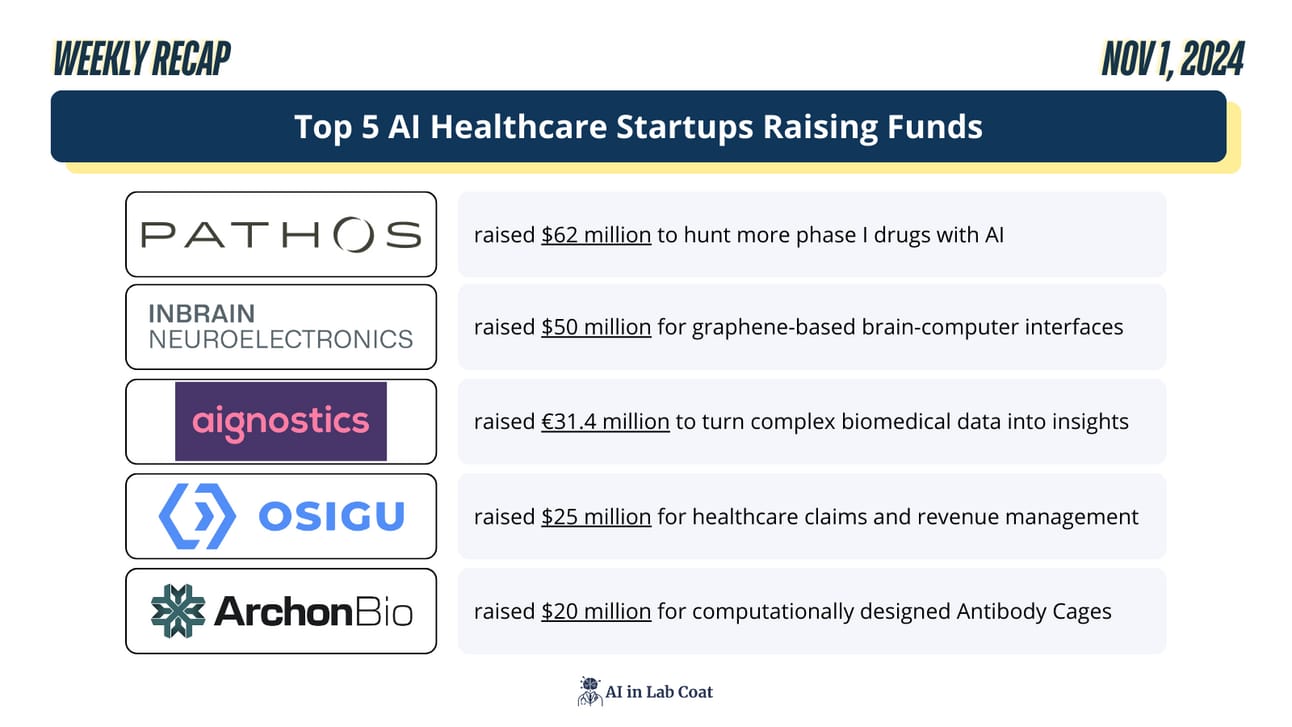

Top Funded Startups

Product Pipeline

TOP INVENTION OF 2024

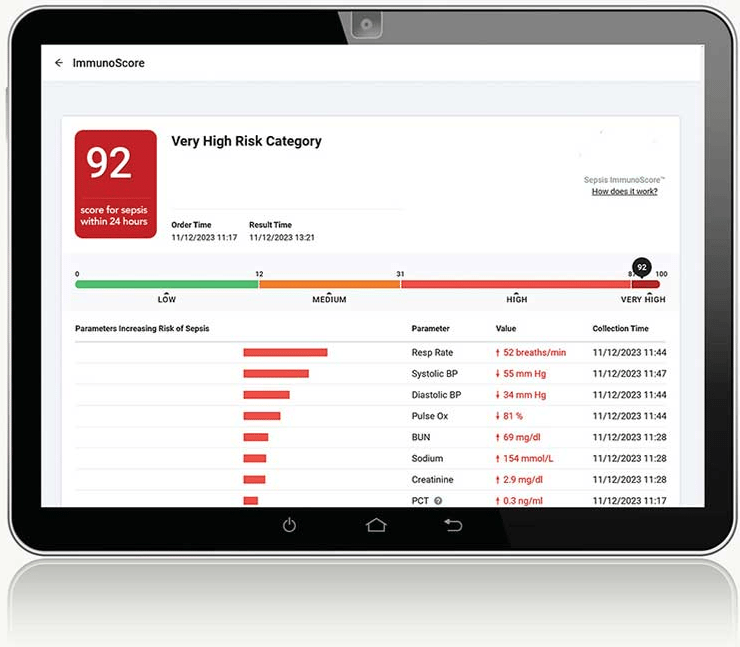

TIME Names Sepsis ImmunoScore™ as Top Invention for AI-Enhanced Sepsis Prediction and Diagnosis

The Sepsis ImmunoScore™ by Prenosis is an FDA-authorized AI-driven diagnostic tool that enhances the detection and management of sepsis, a severe and often fatal condition.

Recognized as one of TIME's Best Inventions of 2024, this tool leverages Prenosis’ Immunix™ platform and a vast biobank to predict and diagnose sepsis with high precision, empowering clinicians to make timely, data-informed decisions.

Through partnerships with over ten U.S. hospitals, the Sepsis ImmunoScore™ has demonstrated its potential to personalize treatment in acute care, aiming to significantly reduce sepsis-related mortality by improving diagnostic speed and accuracy.

For more details: Full Article

Policy and Ethics

AI POLICY

One Year In: How the Biden-Harris Administration is Shaping Safe and Ethical AI Use

In the year since President Biden’s AI-focused Executive Order, the administration has implemented over 100 actions prioritizing AI safety, security, and ethical use across sectors.

Key Achievements:

Safety and Security: Federal agencies mandated reporting from AI developers on safety testing and established the U.S. AI Safety Institute to assess high-stakes AI models.

Healthcare: HHS set transparency requirements for AI in health IT, addressing risks in predictive algorithms and enforcing anti-discrimination under the ACA.

Worker and Consumer Protections: The DOL issued AI workplace guidelines to protect workers’ rights, while the Department of Education advised on safe AI use in education.

Global Leadership: The U.S. led international efforts, including a UN General Assembly resolution on AI safety, and signed the Council of Europe’s framework convention on AI and human rights.

This robust framework aims to responsibly harness AI while addressing privacy, security, and equity concerns across all sectors.

For more details: Full Article

Have a Great Weekend!

❤️ Help us create something you'll love: tell us what matters!

💬 We read all of your replies, comments, and questions.

👉 See you all next week!

- Bauris

Trivia Answer: C) Hydrogen peroxide, baking soda, and dish soap

Tomato juice might seem like the go-to for skunk stink, but it only masks the smell temporarily. The true hero here? A magical mix of hydrogen peroxide, baking soda, and dish soap! This combo busts up the nasty sulfur compounds in skunk spray, actually eliminating the odor. Tomato juice just leaves you smelling like a skunky salad, but this mix makes you skunk-free!
